Now this headline and its subject could be the title and scope of a whole book (and there are a number of them available), but I wanted to get into the subject through some initial posts on the matter. I also want to do some mobile "drag & drop" app development myself, to try out a range of new app dev tools for non-programmers (see links later on).

To start with, Gartner predicts, with its usual assurance, that by 2016, "40 Percent of Mobile Application Development Projects Will Leverage Cloud Mobile Back-End Services". And it doesn't stop there: this is "causing development leaders to lose control of the pace and path of cloud adoption within their enterprises", predicts Gartner, Inc.

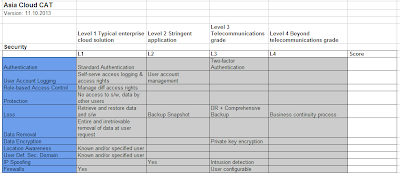

Mobile developers and apps using cloud-based platforms for their service management, processing and storage doesn't mean losing control per se in my opinion, just as on-prem or in-house development and deployment platforms aren't inherently more or less secure than cloud-based ones. It boils down to security policy and culture, and how one actually adheres to them. But using a cloud-based service delivery platform for mobile apps and services seems like a no-brainer if the app in question is Internet-facing or supposed to be used by a public audience, and not just internally in an enterprise.

Using a cloud-based development and service delivery platform, i.e. a Platform as a Service (PaaS) kind of cloud platform with support for a wide range of ready-to-go development environments, databases and tools, a step above basic IaaS platforms, gives a range of benefits and options, including:

- A uniform development, test, piloting and launch environment and platform: one doesn't need to move code, databases, web servers and other service delivery components from a closed, limited-capacity dev environment to a more scalable test & pilot environment, and then on to a production set-up that supports the number of users and the traffic that might come in at peak times

- In other words, a cloud-based dev, test and production environment for mobile services gives built-in load balancing, scalability and capacity on demand, which in-house platforms or IT departments typically struggle with

- Most cloud platforms also have built-in functionality for server-side processing, off-loading the clients or apps from this work, as well as caching and static content serving

- And most cloud service platforms have built-in security provisions, like DDoS protection, firewalling and authentication services.
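To make the server-side processing and caching point a bit more concrete, here is a minimal sketch in plain Python. It is not tied to any of the platforms mentioned in this post: the `READINGS` data and the `city_summary` function are made up for illustration, and the in-memory `lru_cache` simply stands in for whatever caching layer a given cloud platform would offer.

```python
import json
from functools import lru_cache

# Hypothetical raw data; on a real platform this would live in a cloud datastore.
READINGS = {
    "oslo": [3.0, 4.0, 5.0],
    "bergen": [6.0, 7.0, 8.0],
}


@lru_cache(maxsize=128)
def city_summary(city: str) -> str:
    """Aggregate on the server and return a small JSON payload for the app."""
    values = READINGS[city]
    summary = {
        "city": city,
        "count": len(values),
        "average": sum(values) / len(values),
    }
    # The mobile client only receives this compact summary, not the raw data.
    return json.dumps(summary, sort_keys=True)
```

The idea is that the app asks for `city_summary("oslo")` and gets a few bytes back, while the number crunching, and any repeated requests for the same city, are handled on the backend.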

If one is using development platforms on Google App Engine or Mobile Backend Starter, MS Azure or Amazon AWS, one also typically gets:

- Access to industry-standard development environments like LAMP, Ruby or Node.js

- Authentication of users and services against the vendor's shop, messaging or document store services

- Integration of log and analytics tools for mobile apps and services, for instance Google Analytics for Mobile

- Easier access to public app stores like Google Play

With smartphones and tablets now becoming the clients of choice for most users, there's a race on between the dominant and wannabe cloud service providers to be seen as the most attractive platform for mobile developers, recently highlighted by the Google Mobile Backend Starter launch earlier in October. Here's a list of some cloud-based mobile development platforms and services:

- Google Mobile Backend Starter

- MS Windows Azure Mobile Services

- Rackspace Mobile Stacks

- Amazon AWS Mobile Development Center

Now, about that list of mobile "drag & drop" app development tools: new post coming up shortly!

Erik Jensen, 30.10.2013