Biggest lesson from the Snowden revelations: social engineering and employee "activities" of various sorts are still the greatest threat to company IT security. As they always have been.

All the world's best, strongest and best-maintained network and IT security systems won't matter if they are bypassed or circumvented through social engineering, by employees or by third-party on-site contractors, and that holds equally for on-site and cloud IT services.

In many ways, today's debate over whether cloud IT is secure, whether a company should make the jump, or even whether it should pull back the initial services and data that may already have made the cloud leap, resembles the discussions at CxO level 15-20 years ago about whether a company should connect to the Internet at all (beyond the IT department and a few isolated deployments): it wasn't perceived as secure, there were strange things out there, and hackers might get into the LAN!

Most companies, nearly 100% of them, went with the Internet after putting in place something resembling an "Internet access and usage security" policy and a big firewall, and in doing so gained all the Internet, web and seamless communication services that are now taken for granted. Users were allowed to browse the Internet.

Erik Jensen, 12.12.2013

Twenty years later, many line-of-business units and departments have not only browsed but also put a number of SaaS cloud services to good use. In many cases the new IT service buyers in HR, marketing or finance did not even know that the solution they had to have, ASAP!, was in fact delivered as a cloud service. Lately the IT department has come around to the cloud IT service delivery model as well, and looks likely to continue on that path, as the business benefits are seen to outweigh the drawbacks of the NSA or other government agencies listening in (which most pragmatic companies and network security managers took for granted anyway, just as for general Internet traffic and service usage).

Two additional factors: first, if you aren't doing cloud computing or cloud service delivery, someone else certainly will be, and will keep getting better at it, while you risk being stuck with an on-site service model and its lead times. Second, it's not as if having everything behind that big firewall doesn't carry risks of its own, be it at the human, social or system level...

This pragmatic, take-appropriate-measures approach was highlighted in a recent IDC survey of IT executives in North America and Europe, the "2013 U.S. Cloud Security Survey" (Sep 2013), whose findings can loosely be summarized as "yes, there are security and surveillance concerns, but the economic benefits and increased business agility outweigh security concerns".

What are the measures that can be taken by most companies to overcome cloud security concerns and issues?

A new infographic by Sage highlights the first steps that should be taken by anyone, for any IT solution really:

- Establish the IT and business security policy for IT in general and the the IT solution in question

- Train your employees. On the IT policy and the IT solution in question, best practises etc.

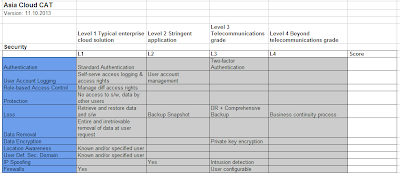

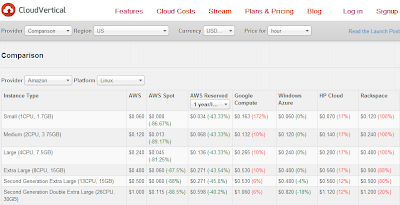

- Assess business needs, i.e. what business data needs to be where, accessible by whom and how. With what kind of service levels.

- Choose the right supplier and service for number 3
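To make the needs assessment and supplier choice steps a bit more concrete, here is a minimal, hypothetical sketch in Python. It assumes you record each business data set with where it may be stored, which roles may access it and what availability it requires, and then check candidate cloud suppliers against every recorded need. All class names, fields, regions, suppliers and SLA figures are made-up illustrations, not anything from the Sage infographic or a real supplier comparison.

```python
from dataclasses import dataclass

# Hypothetical model of the "what data, where, accessible by whom and how,
# with what service levels" assessment. All names and numbers are invented.

@dataclass(frozen=True)
class DataNeed:
    name: str                   # business data set, e.g. "payroll"
    allowed_regions: frozenset  # where the data may be stored
    allowed_roles: frozenset    # who may access it
    min_availability: float     # required service level, e.g. 0.999


@dataclass(frozen=True)
class Supplier:
    name: str
    regions: frozenset                # regions the supplier can store data in
    availability_sla: float           # availability the supplier commits to
    supports_role_based_access: bool  # can access be restricted per role?


def supplier_fits(supplier: Supplier, need: DataNeed) -> bool:
    """True if the supplier can meet this single data need."""
    return (
        bool(supplier.regions & need.allowed_regions)  # at least one allowed region
        and supplier.availability_sla >= need.min_availability
        # role restrictions only matter if the need actually defines roles
        and (supplier.supports_role_based_access or not need.allowed_roles)
    )


def suitable_suppliers(suppliers, needs):
    """Names of the suppliers that meet every assessed data need."""
    return [s.name for s in suppliers if all(supplier_fits(s, n) for n in needs)]


# Hypothetical example data for the needs assessment and supplier shortlist.
needs = [
    DataNeed("payroll", frozenset({"EU"}), frozenset({"HR"}), 0.999),
    DataNeed("campaign assets", frozenset({"EU", "US"}), frozenset({"Marketing"}), 0.99),
]
suppliers = [
    Supplier("Supplier A", frozenset({"US"}), 0.9995, True),
    Supplier("Supplier B", frozenset({"EU", "US"}), 0.999, True),
    Supplier("Supplier C", frozenset({"EU"}), 0.995, False),
]

if __name__ == "__main__":
    print(suitable_suppliers(suppliers, needs))  # ['Supplier B']
```

Supplier A fails on data location for payroll and Supplier C on the required service level, which is the point: the supplier choice falls out of the needs assessment rather than the other way round.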